- Created by Kenn Knowles , last modified by Kai Cowger on Apr 25, 2024

-

Translate page

You are viewing an old version of this content. View the current version.

Compare with Current View Version History

« Previous Version 194 Next »

The Data Export Tool does not change your data in CommCare. It simply exports the data into a raw format that you can use for data insights and analytics using the CommCare API to export the data. The configuration for what data is exported and how it is formatted are provided to the tool via an Excel configuration file. The export can be in the format of a file (Excel, CSV, or JSON) or to a specified database. For this reason, using the DET requires knowledge of both Python and SQL, and for this reason, is an advanced feature.

Important note about PHI: Please note that the commcare-export tool offers no security features for dealing with Protected health information (PHI) directly. If you intend to export PHI data from CommCare, please ensure the appropriate measures are in place to protect your data.

https://www.loom.com/share/c0483859d68a4eb184059142489292fdPage lookup error: page "[Internal] CommCare Public Management" not found.

If you're experiencing issues please see our Troubleshooting Guide.

Page lookup error: page "[Internal] CommCare Public Management" not found.

If you're experiencing issues please see our Troubleshooting Guide.

Table of Contents

DET Example Use Case

Haik is a data analyst for a child immunization NGO specializing in tracking polio vaccinations in developing countries using CommCare. The increase of vaccination supplies and the training of competent healthcare workers to administer the vaccinations have significantly improved the child immunization program. More children are getting the vaccinations that they need.

The program's success is leading to a considerable increase in the data collected.

Haik needs to export data regularly to analyze and visualize it for the weekly reports. Manually exporting the data from CommCare HQ is not sustainable. Haik needs a way to export data in bulk in an automated manner.

The CommCare Data Export Tool will help Haik. He can use the DET to pull data from one or more domains and combine data into one large data file, or database, for analysis. Below is an example dashboard that Haik may make with DET.

Technical Requirements

Using the DET requires knowledge of both Python and SQL, and for this reason, is an advanced feature. You a can learn more below about the requirements for using both of these tools.

Python

More information about setting up Python for the DET.

To start, the DET requires you to download Python to your computer. You will use a command-line interpreter tool to run the commcare-export script from the Python script library. You need Python installed to use the commcare-export script. The script library is installed with Python. Installing the script allows the Data Export Tool to export your data from CommCare HQ.Below is a video walking through this process.

The Excel Query File

Read more about about setting up the Query file for the DET.

Overview

A Query file is an Excel file (.xlsx) saved to the Python Scripts folder.

The Query file specifies which data objects should be requested from the API and which values should be stored when the API payload is received. All other values that are not specified are discarded and not stored in the results set.

One query file can contain many tabs and can combine case and form data queries.

It’s common to have a single query file with one tab for each case or form that you want to export.

A template query file is provided here for cases and forms.

You must provide the path to where the query file is saved in the commcare-export command.

E.g.,commcare-export --query /home/username/Documents/my-query-file.xlsx

Below is more information about this process.

Quick Start

The easiest way to get started with the Data Export Tool is to have CommCare generate a query file for your data models based on an export. See https://dimagi.atlassian.net/wiki/x/JCrKfw for details.

If you prefer to start from scratch, We have provided a template query file here which can be used to build your query files.

See below for more details on the terminology and fields:

Data Source: (Required)

This determines whether you are exporting case, form, or messaging-event data.Filter Name: (Optional)

When you include the “Filter Name” and “Filter Value” columns, you can limit the set of forms or cases returned when you run the DET.Forms: By default, if your data source is “form”, every form submitted to a project space will be returned. To select a particular form type, set “Filter Name” to xmlns.exact and “Filter Value” to your form's XML namespace.

Cases: If your data source is “case”, every case in a project will be returned. To download one particular case type, set “Filter Name” to type and “Filter Value” to the name of the case type you'd like to download. See additional case filtering fields here: https://dimagi.atlassian.net/wiki/x/NDHKfw.

Filter Value: (Optional)

Filter Values determine which forms or cases will be processed and stored.Forms: When exporting forms, you can find the XML namespace for a form by going to your form > Advanced > View (Source XML) and look for the address in the header of your XForm.

See additional form filtering options here: https://dimagi.atlassian.net/wiki/x/VjHKfw. Filter Names are paired with Filter Values to filter the results you request from CommCareHQ. Results are limited to the set of forms or cases whose Filter Name property matches the value listed in this field.Cases: When exporting cases, the Filter Value is the Case Type listed on your case's settings page. You can pull all cases and store them in the same table or filter one case type at a time into a specific table.

Field: (Required)

Each row of this column specifies the name of one output field.

Note that a field named “id” is required for use with a SQL database.

Note that there is no maximum number of output fields.Source Field: (Required)

Each row of this column specifies where the data of the corresponding Field should be extracted from. Note that form properties must be prefixed with “form”. and case properties must be prefixed with “properties”.

(See more details about the proper format for Source Fields below in the How to Reference Fields in Forms and Cases section).Map Via: (Optional)

This is only relevant if you're exporting to SQL database.

This column allows you to convert strings to other types, e.g.:Str2date converts to datetime column

Str2num converts to integer column

Table Name: (Optional)

By default, the table name is taken as the Excel Sheet name. This can be overridden by adding this column with a single value in the column for the table name.

Including referenced items in the results may significantly decrease the tool's performance due to the additional data that is being requested, which must be fetched separately from the main form or case data.

Advanced Usage

Data Types

When exporting to a SQL database you can also explicitly set data types by adding a column with the heading "Data Type". See Data Types in https://dimagi.atlassian.net/wiki/x/cgrKfw.

Data Source

Advanced Usage: You may specify a deep JSONPath into the data to iterate over repeats. For example, if you have a repeat called "child" in the root of the form, the data source would become "form.form.child". Also, note that fields are relative to the source, not absolute. See the DET Tips and Tricks page for more information.Alternate Source Field [N]: (Optional)

In instances where different source fields should be placed in the same output field (for example, when renaming fields in the app), alternate source fields can be specified. Each Alternate Source Field.

The column must be named Alternate Source Field [N] where "[N]" is replaced by a number. For example:

Source Field | Alternate Source Field 1 | Alternate Source Field 2 |

dob | date_of_birth | date_of_birth |

The source fields are each considered in order, and the first one that appears in the data is used (even if its value is empty).

Creating an Excel Query File in CommCare HQ

The easiest way to create a query file is to have it generated by CommCare.

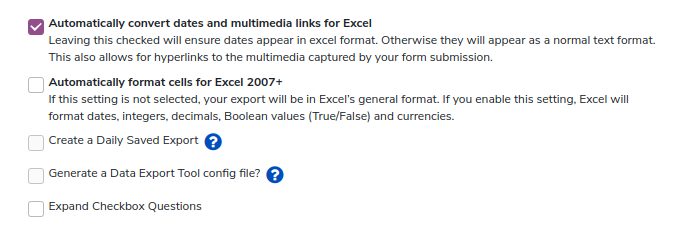

Any Export from the Data → Export Form/Case Data tab in CommCare can be used as a basis for an Excel query file. To generate a query file for any export, check the box that says "Generate a Data Export Tool config file".

Then, on the exports list page, a button labeled "DET Config" with a download icon will appear with the option to download the configuration file. This file will be pre-configured with the right filters and properties to export the selected data.

Mappings and Tips

The following mappings are used to convert from the export to the query file:

The "sheet name" from the export will be used as the table name in the config file. It is recommended to edit this from the default "Cases" and "Forms" to something more meaningful like "pregnant_women" or "household_registrations".

The "Display" property will be used as the SQL column name.

For best practices and maximum SQL compatibility is recommended to remove all special characters from both sheet names and "Display" properties, and to make the fields lowercase.

Known Limitations

The following limitations apply to any query files generated in this manner:

Any "special" transformations - for example, pulling an owner name from an owner_id are not yet supported. These values will be pulled down as the raw underlying data (e.g. the ID).

The "Parent Cases" sheet on case exports is not supported.

Nested repeat data in forms is not fully-supported.

How to reference Fields in Forms and Cases

One of the easiest ways to confirm form and case field names is by looking at the column name in a regular form/case export from CommCareHQ.

In general, the name of form and case fields matches those that you wrote when you created your application, with predictable modifications. When referencing properties in the Source Field column, ensure that you've typed them in exactly as they're defined in CommCare - case-sensitivity matters.

Top-Level Form Fields: A field named FIELD in a form is referenced by form.FIELD. For example, the field age is referenced by form.age in an Excel query file.

Top-Level Case Fields: A field name FIELD in a case is referenced by properties.FIELD. For example, the field delivery_date is referenced by properties.delivery_date in an Excel query file.

Nested Fields: A field nested more deeply within a form or case may be referenced by separating each level of nesting with a period. For example, in the demo_query_file_form.xlsx, you will see the nested field form.delivery_information.delivery_type, which will pull out the value of the delivery_type question inside the group delivery_information. This will always match the structure of your application.

(Power Users / Programmers Only) To find out exactly what fields are available, you must view the raw output of the CommCareHQ Case List API and Form List API. You can then reference these fields using an arbitrary JSONPath into the structure.

Aggregation and Calculation Functions

The following are functions that you can use to change the value of a column. They can be added to the Map Via column in the Excel query file.

Function name | Description | Example usage | Since version |

count-selected | Count the number of options selected for a multi-select value. | Value: a b c d Map Via: count-selected Output: 4 | 0.12.4 |

selected-at | Get the option at the given position for a multi-select value. Note: the position index is zero-indexed. This means that to choose the first item, you need to enter 0, the second item, 1, etc. | Value: red green blue Map Via: selected-at(2) Output: blue

| 0.12.4 |

selected | Checks to see if a value was selected from a multi-select value. Outputs 'True' or 'False'. | Value: rash fever Map Via: selected(fever) Output: True | 0.12.4 |

attachment_url | Converts a value into a URL that can be used to download attachments | Value: photo.jpg Map Via: attachment_url Output: https://www.commcarehq.org/a/my-domain/api/form/attachment/the-form-id/photo.jpg | 0.13.2 |

substr | Returns the substring indexed by [first arg, second arg), where indexes start from zero. Suitable for truncating long strings to fit a column's maximum length. | Value: abcdef Map Via: substr(2, 5) Output: cde | 1.3.2 |

form_url | Returns the URL to the form page on CommCare HQ (only relevant for form exports) | Value: (anything) Map Via: form_url Output: https://www.commcarehq.org/a/my-domain/reports/form_data/<FORM ID>/ | 1.4.0 |

case_url | Returns the URL to the case page on CommCare HQ (only relevant for case exports) | Value: (anything) Map Via: case_url Output: https://www.commcarehq.org/a/my-domain/reports/case_data/<CASE ID>/ | 1.4.0 |

format_uuid | Given a UUID this function returns a formatted HEX UUID. If the input value is not a UUID it will return a blank value. | Value: 00a3e0194ce1458794c50971dee2de22 Map Via: format_uuid Output: 00a3e019-4ce1-4587-94c5-0971dee2de22 | 1.4.0 |

sha1 | Create an SHA1 hash of the column value. | Value: abc Map Via: sha1 Output: 03cfd743661f07975fa2f1220c5194cbaff48451 | 1.2.0 |

unique | Output only unique values from a list | Source expression: messages[*].phone_number Value: ['+16355573', '+16355573', '+16355572'] Map Via: unique Output '+16355573,+16355572' | 1.7.3 |

Exporting User and Location Data

The --users and --locations options export data from a CommCare project that can be joined with form and case data. The --with-organization option does all of that and adds a field to Excel query specifications to be joined on.

Specifying the --users option or --with-organization option will export an additional table named 'commcare_users' containing the following columns:

Column | Type | Note |

id | Text | Primary key |

default_phone_number | Text |

|

Text |

| |

first_name | Text |

|

groups | Text |

|

last_name | Text |

|

phone_numbers | Text |

|

resource_uri | Text |

|

commcare_location_id | Text | Foreign key into the commcare_locations table |

commcare_location_ids | Text |

|

commcare_primary_case_sharing_id | Text |

|

commcare_project | Text |

|

username | Text |

|

The data in the 'commcare_users' table comes from the List Mobile Workers API endpoint.

Specifying the --locations option or --with-organization options will export an additional table named 'commcare_locations' containing the following columns:

Column | Type | Note |

id | Text |

|

created_at | Date |

|

domain | Text |

|

external_id | Text |

|

last_modified | Date |

|

latitude | Text |

|

location_data | Text |

|

location_id | Text | Primary key |

location_type | Text |

|

longitude | Text |

|

name | Text |

|

parent | Text | resource_uri of parent location |

resource_uri | Text |

|

site_code | Text |

|

location_type_administrative | Text |

|

location_type_code | Text |

|

location_type_name | Text |

|

location_type_parent | Text |

|

location level code | Text | Column name depends on the project's organization |

location level code | Text | Column name depends on the project's organization. |

The data in the 'commcare_locations' table comes from the Location API endpoint along with some additional columns from the Location Type API endpoint. The last columns in the table exist if you have set up organization levels for your projects. One column is created for each organizational level. The column name is derived from the Location Type that you specified. The column value is the location_id of the containing location at that level of your organization. Consider the example organization from https://dimagi.atlassian.net/wiki/x/NjPKfw. A piece of the 'commcare_locations' table could look like this:

location_id | location_type_name | chw | supervisor | clinic | district |

939fa8 | District | NULL | NULL | NULL | 939fa8 |

c4cbef | Clinic | NULL | NULL | c4cbef | 939fa8 |

a9ca40 | Supervisor | NULL | a9ca40 | c4cbef | 939fa8 |

4545b9 | CHW | 4545b9 | a9ca40 | c4cbef | 939fa8 |

location_id | location_type_name | chw | supervisor | clinic | district |

939fa8 | District | NULL | NULL | NULL | 939fa8 |

c4cbef | Clinic | NULL | NULL | c4cbef | 939fa8 |

a9ca40 | Supervisor | NULL | a9ca40 | c4cbef | 939fa8 |

4545b9 | CHW | 4545b9 | a9ca40 | c4cbef | 939fa8 |

In order to join form or case data to 'commcare_users' and 'commcare_locations', the exported forms and cases need to contain a field identifying which user submitted them. The --with-organization option automatically adds a field called 'commcare_userid' to each query in an Excel specification for this purpose. Using that field, you can use a SQL query with a join to report data about any level of your organization. For example, to count the number of forms submitted by all workers in each clinic:

Count forms by clinic

SELECT l.clinic,

COUNT(*)

FROM form_table t

LEFT JOIN (commcare_users u

LEFT JOIN commcare_locations l

ON u.commcare_location_id = l.location_id)

ON t.commcare_userid = u.id

GROUP BY l.clinic;

Note that the table names 'commcare_users' and 'commcare_locations' are treated as reserved names and the export tool will produce an error if given a query specification that writes to either of them.

The export tool will write all users to 'commcare_users' and all locations to 'commcare_locations', overwriting existing rows with current data and adding rows for new users and locations. If you want to remove obsolete users or locations from your tables, drop them, and the next export will leave only the current ones. If you modify your organization to add or delete levels, you will change the columns of the 'commcare_locations' table, and you will likely want to drop the table before exporting with the new organization.

Exporting Messaging Event Data

As of version 1.7.3, data from CommCare's SMS messaging events can be exported using the Data Export Tool.

To export data, change the "Data Source" to "messaging-event".

Column header | Value |

Data Source | messaging-event |

Filter Name | Any of the filters (other than date and limit) can be applied |

Filter Value | The values you would use with the API |

Source Field | The fields in the API response e.g. content_type, recipient.name, messages[*].phone_number, messages[*].email_address messages[*].date |

Field | The user-defined name of the field in the output (e.g. Excel / table column name) |

Example Query File

API Fields

Please see here for the full list of messaging event API response fields.

Exporting One Row Per Message

When "Data Source" is set to "messaging-event" the export will contain one row per messaging event. Since each messaging event may have more than one message, this may result in multiple messages per row, which can be difficult to analyse.

To export one row per message, change "Data Source" to "messaging-event.messages.[*]". This will result in each message being exported to its own row.

When "Data Source" is set in this way, you can access data from the parent messaging event by prefixing the fields with '$.'

Source Field | Field | Data Source | Filter Name | Filter Value |

|---|---|---|---|---|

id | id | messaging-event.messages.[*] | content_type | sms |

$.content_type | content_type |

|

|

|

$.status | event_status |

|

|

|

status | message_status |

|

|

|

$.date | event_date |

|

|

|

date | message_date |

|

|

|

phone_number | phone_number |

|

|

|

email_address | email_address |

|

|

|

content | message_content |

|

|

|

$.recipient.recipient_id | recipient_id |

|

|

|

$.recipient.type | recipient_type |

|

|

|

$.recipient.name | recipient_name |

|

|

|

Note: if there are no messages then the event will not be exported at all. To work around this, you can use the “--export-root-if-no-subdocument” command line option which will result in a single row being exported for the event if there are no messages.

Additional Information

For information on the message event itself and its data fields, please see API at https://dimagi.atlassian.net/wiki/x/6jHKfw.

Exporting Data

You have the choice to export to a file(Excel, CSV, JSON, etc.) or a SQL database. Exporting to Excel files and Markdown is primarily used for testing. Exporting to SQL is more commonly used.

You can specify batch sizes by adding a --batch-size=number at the end of your command.

“Checkpoints” enable incremental exports. A checkpoint will get set after each batch of data that is fetched from the API. The next export will start at the last checkpoint. Checkpoints are only created when exporting to a SQL database.

Export to an Excel file

Exporting to an Excel file allows you to get a full results-set without setting up a local database. When you export to Excel, there are no checkpoints. It’s all or nothing. If the query fails, the Excel file is not created.

You can run a data export and save it to an Excel file with the DET. Excel files are great for one-time data exports or if you want to use them to analyze and visualize the data. Excel is also a great option if you are not familiar with working with databases.

The downside to Excel files is that they can’t handle large datasets. You can also only do once-off export to an Excel file; there are no “checkpoints” created for incremental updates when the DET is run.

Note: You can specify batch sizes by adding a --batch-size=number at the end of your command.

When exporting to Excel, the DET will fetch data from the API in batches, and then when the final batch is processed, the output will be written to the Excel file.

Export to a Database

Exporting to a database supports incremental updates. When you export to a database, you can have millions of rows of data, so the DET handles it in batches.

After each successful batch, the tool writes a checkpoint to a database table that the DET maintains.

By default, each time the commcare-export command runs, it will only import results that were modified after the most recently imported form or case. This means that, for very large datasets, you do not need to export a full set of results every time.

This can be an incredibly powerful feature for a data pipeline. (See more information at https://dimagi.atlassian.net/wiki/x/BibKfw).

You can configure the DET to pull data on a schedule to automatically keep your data up to date or run it as a one-time data dump.

Exporting to SQL allows you to clean and manipulate the data before you integrate it with data visualization tools like Power BI or Tableau.

If you are looking to export into a SQL database, you must have this database set up, and you should know how to provide a URL for it. The DET will create the necessary tables and columns in the SQL database based on the properties contained in the query file.

By choosing "--output-format sql" and "--output DATABASE_URL" in your command, you can save the output to a SQL database.

The term DATABASE_URL is a standard URL format for databases in these commands. If, for example, you have a PostgreSQL database called “registrations” that is hosted on db.example.com that you would like to save into instead, the URL might look like postgresql://db.example.com/registrations (you may need to include username and password in the URL).

Checkpoints

The first time you run a DET query to a database, it will get all the records that exist and then set the checkpoint at the end.

The next time you run the query, it will just start from the last checkpoint and get all the cases modified after that time or forms submitted after that time.

This checkpoint data is stored in a table called “commcare-export-runs” in the database.

If you change the query file, the DET will know that it’s a new query file because the MD5 hash will not match. This triggers a full refresh of all data in the domain. See https://dimagi.atlassian.net/wiki/x/OTDKfw for more information.

A checkpoint will get set after each batch of data that is fetched from the API. Using the --batch-size=number command-line argument will change how much data is fetched in each batch and, therefore, the frequency of the checkpoints.

Technical information for exporting to SQL

Note that a field named id is required for use with a SQL database.

Exporting to SQL requires installing additional python libraries. These are listed in the table below.

Supported Databases

Database name | URL format | Maximum field name length | Dependencies |

MSSQL | mssql+pyodbc://<username>:<password>@<host>/<database name>?driver=ODBC+Driver+18+for+SQL+Server

| 128 | Requires the 'pyodbc' library: pip install pyodbc Also requires the SQL Server drivers to be installed. |

Azure MSSQL | mssql+pyodbc:///?odbc_connect=<quoted connection string> (see: https://dimagi.atlassian.net/wiki/x/lErKfw ) | 128 | Same as MSSQL above |

MySQL | mysql+pymysql://<username>:<password>@<host>/<database name>?charset=utf8mb4

| 64 | Requires the 'pymysql' library: pip install pymysql

|

Oracle | oracle+cx_oracle://<username>:<password>@<host>/<database name>

| 128 | Requires the 'cx_oracle' library: pip install cx_oracle |

PostgreSQL | postgresql://<username>:<password>@<host>/<database name>

| 63 | Requires the 'psycopg2' library: pip install psycopg2 |

Data types

Data Type Detection

By default, the data export tool will choose the data type of the SQL column based on the incoming data. Strings, integers, and boolean values are supported.

Columns are created dynamically when data is received, and the types of columns may be updated dynamically as new data comes in to make it compatible. This means that until the export tool sees a non-null value for the column, it will not create the column in SQL.

You can also use the command-line option "--strict-types" which will prevent SQL column types from being changed. This may result in errors if incompatible data is received from CommCare HQ.

Explicit Data Types

You can also explicitly set data types by adding a column with the heading "Data Type". Allowed values for this column are:

Value |

text |

boolean |

date |

datetime |

integer |

json (Postgres Databases only) |

If you specify a data type column, then the column will be created when the table is first created instead of dynamically based on data. Note that if you use explicit data types, you are responsible for ensuring the data going into the database is valid for that column. Specifying these conversions may be necessary for some data types - especially when converting string/text values to other types.

Data Type Conversion

To convert data of one type to another type before it goes into the database, you can provide the following functions in the Map Via column:

Function name | Description |

str2bool | Convert a string value to a boolean. The following values result in a True output: 'true', 't', '1' (case doesn't matter) If the value is already a boolean, it will be output without change. All other values result in a False output. |

bool2int | This supports converting boolean values and booleans represented as strings to the integers 1 or 0. |

str2num | Convert a string representation of a number to a number. |

str2date | Convert a string representation of a date or timestamp to a date or timestamp. |

Export to Markdown

Exporting to “Markdown” gives you the results in the command-line. Markdown is primarily used for testing.

Generating Database Connection Strings

Azure MSSQL

Microsoft Azure connection strings take the following form:

mssql+pyodbc:///?odbc_connect=<quoted connection string>

The <quoted connection string> value is difficult to generate by hand as it requires escaping many characters. To generate a connection string for Azure databases, it is recommended to use the following Python code:

Generating a connection string

from urllib.parse import quote_plus

# change these values to match your Azure database

server = 'tcp:your-azure-server.database.windows.net'

database = 'your-database-nametest'

username = 'yourusername'

password = '***'

# these should not usually need to change

driver = '{ODBC Driver 18 for SQL Server}'

port = 1433

odbc_str = f'DRIVER={driver};SERVER={server};PORT={port};DATABASE={database};UID={username};PWD={password}'

sqlalchemy_str = 'mssql+pyodbc:///?odbc_connect=' + quote_plus(odbc_str)

print(sqlalchemy_str) # use this value in the data export tool

# optionally test your connection

from sqlalchemy import create_engine

engine = create_engine(sqlalchemy_str)

query = "select * from <table_name>"

print(engine.execute(query).fetchall())

Authentication when using DET

Two sets of credentials are required:

CommCareHQ credentials: a Username and an API Key.

Database credentials (if outputting to a database). The database user must have access to create new tables, create new columns in those tables, and read and edit data.

Click below for more information.

CommCare HQ Authentication Requires an API Key

API Key authentication method is required. Each user must create their own API key and store it securely.

API Keys can be scoped (restricted) to a domain or a set of domains

API Keys can also be set to an Allow-List IP Address or list of IP Addresses.

IP Ranges are not permitted. Each individual IP address will need to be named. If you leave this blank, API requests from all IP addresses in the world will be allowed.

Create an API key: https://www.commcarehq.org/account/api_keys/

API keys belong to individual web users. Each user who wants to run a Data Export Tool query will need their own API key.

Enterprise Domains

When using domains that are linked to a main account, whose permissions inherit from the main account, the user doesn’t need to accept the invitation on all the domains.

Security Recommendations

When using domains that are linked to a main account, whose permissions inherit from the main account, the user doesn’t need to accept the invitation on all the domains.

Store API Key name and password in a password manager.

Do not share your API key with anyone.

Do not email it to yourself or store it in any document that is not password-protected.

Rotate keys: Create new API Keys each month and name the API Key by the month.

Referencing API Key From Command Line

To use API Key authentication in Data Export Tool from the command line, the following two parameters are required:

--auth-mode: "apikey"

--username: set to the web user's email (e.g. username@email.com)

--password: set to the API Key generated above

An example call might look like:

C:\Python39\Scripts>commcare-export --output-format xlsx --output YOUR_OUTPUT_FILE_NAME.xlsx --project YOUR_PROJECT --query YOUR_QUERY_FILE_NAME.xlsx --username username@email.com --auth-mode apikey --password ***********YOUR_API_KEY**************

Installing and Upgrading DET

The DET can be run by either:

Downloading the DET executable file (recommended for general users)

Installing the package through python (for the more tech-savvy)

The section below explores both options

Download the DET executable

The latest DET executable file can be downloaded from the github releases page by looking under the “Assets” section of the latest release. Both Windows and Linux machines are supported, but you have to download the correct executable for the appropriate operating system.

The latest DET executable file can be downloaded from the github releases page by looking under the “Assets” section of the latest release. Both Windows and Linux machines are supported, but you have to download the correct executable for the appropriate operating system.

Windows executable

The Windows executable file is called commcare-export.exe. You can download the file to any place on your system and run it from there in the same way that you would run the commcare-export command, with one exception being that you have to run the file as you would run any executable file on Windows, for example

.\commcare-export <options>

That’s it! No installation needed!

The only thing to keep in mind is that you have to specify the path to the query file correctly if you don’t have the DET executable in the same location as the query file.

Linux binary

The Linux binary file is simply called commcare-export. After having downloaded the DET binary file you have to make sure the file has the necessary executable permission. You can check this by running

test -x <path-to-file> builds.txt && echo true || echo false

If the file is not executable the terminal will show “false”, in which case you can simply give the file the necessary executable permission by running

chmod +x <path-to-file>

Now you can simply run the DET as you would any other binary file

./commcare_export <options>

Installing DET through the package

This is for more tech-savvy users who have a fair level of comfort with using the command line and working with python packages.

When using the DET, these are the basic steps to follow:

Download and install Python.

Install a Python package manager (we recommend pip).

Install the ComCare Data Export tool.

Create an Excel Query File.

Write a command-line query to run the export.

(Optionally) Configure export to be re-run automatically.

Use data.

Install DET on Windows

The Data Export tool is installed by using the pip install commcare-export command.

Install Python

Visit the Python for Windows page and download the latest version of Python.

Install pip

Installation is straightforward if your local machine has a recent version of Python installed because the package installer, pip, will already be installed.

You can check whether pip is installed by running the following in the command-line interpreter:

C:\Python39>python -m pip --version

Install commcare-export

C:\Python39\scripts>pip install commcare-export

Upgrade commcare export

C:\python39\scripts>pip install -U commcare-export

Install DET on MacOS

The Data Export tool is installed by using the pip install commcare-export command.

Install Python

For MacOS make sure that your Python installation is upgraded to the latest version. The best way to install software on a Mac is via Homebrew. You need to open a terminal and run two scripts to install Homebrew and the latest version of Python.

Open a terminal and run the following two commands.

The first script installs Homebrew.

The second script installs Python and any prerequisites.

/usr/bin/ruby -e "$(curl -fsSL https://raw.githubusercontent.com/Homebrew/install/master/install)" brew install python

For Homebrew, use the following:

brew install <SOME PACKAGE>.

Install commcare-export

pip2 install commcare-export

Upgrade commcare-export

sudo pip2 install -U commcare-export

NOTE: If you have any problems setting up Python on MacOS, use this reference:

http://docs.python-guide.org/en/latest/starting/install/osx/

Install DET on Linux

The Data Export tool is installed by using the pip install commcare-export command.

Install Python

If you are on a Linux-based operating system, you probably have an automatic package manager available. You probably already have Python installed, but if not, then you will need to invoke the package manager for your operating system. Here are some possible commands to run in the command-line interpreter:

$ apt-get install python

or

$ yum install python

or

$ emerge python

Install pip

$ sudo easy_install pip

Install commcare-export

$ sudo pip install commcare-export

Upgrade commcare-export

$ sudo pip install -U commcare-export

NOTE: If you get an error "ValueError: Invalid column index 0" you should run this command to downgrade one of the python libraries:

$ pip install -U openpyxl==1.8.6

Run a CommCare Export

Once you have all the components ready, you can run the commcare-export command.

Components needed to run the DET:

The DET installed on your computer

A project space with an application that contains data.

A query file for the DET to tell it what data to export.

The Necessary credentials for CommCare HQ (Username and API key)

If exporting to a database, the necessary credentials for the database you choose to use.

Optional commcare-export flags

For a full list of flags, you can run commcare-export --help.

argument | possible values |

--since | Provide the DATE value in YYYY-MM-DD format. This will export all data after this date. |

--until | Provide the DATE value in YYYY-MM-DD format. This will export all data before this date. |

--verbose | Includes a more detailed output to the user. |

--verbose 2> logs.txt | This flag exports log files to a text file. |

--verbose 2>> logs.txt | Append logs to an already existing log file. |

--missing-value | Override the default value (null) that is used whenever data is missing from the form/case. |

--api-version | Specify the version of the CommCare API to use for exports. Defaults to v0.5. |

--start-over | Ignore any checkpoints that may exists and re-export ALL data. |

--strict-types | This won’t allow changing column types once they are created when saving to a SQL database. |

--version | Output the version of commcare-export that you're using (useful for debugging). |

--auth-mode | Use "digest" auth, or "apikey" auth (for two factor enabled domains). |

Tips:

OUTPUT-FORMAT refers to the format in which you want to export the data.

YOUR_PROJECT refers to the name of your project space, not the entire domain URL. For instance, the URL would be https://www.commcarehq.org/a/YOUR_PROJECT. For the tool, type in YOUR_PROJECT.

If you are using a domain that isn't stored on www.commcarehq.org, then you will need to use the "--commcare-hq YOUR_URL" flag in the command prompt.

YOUR-QUERY_FILE_NAME.xlsx refers to your query file that is saved in the scripts folder of your Python installation.

YOUR_OUTPUT_FILE_NAME.xlsx refers to the file that will contain the exported data. This file will also be saved in the scripts folder of your Python installation.

Export using Windows

Run the following commands in your command line interpreter to export data from CommCare HQ:

Markdown

C:\Python39\Scripts>commcare-export --output-format markdown --project YOUR_PROJECT --query YOUR_QUERY_FILE_NAME.xlsx

Excel

C:\Python39\Scripts>commcare-export --output-format xlsx --output YOUR_OUTPUT_FILE_NAME.xlsx --project YOUR_PROJECT --query YOUR_QUERY_FILE_NAME.xlsx --username username@email.com --auth-mode apikey --password ***********YOUR_API_KEY**************

Database

C:\Python39\Scripts>commcare-export --output-format sql --output DATABASE_URL --project YOUR_PROJECT --query YOUR_QUERY_FILE_NAME.xlsx

Export using MacOS

Run the following commands in your command line interpreter to export data from CommCare HQ:

Markdown

$ commcare-export --output-format markdown --project YOUR_PROJECT --query YOUR_QUERY_FILE_NAME.xlsx

Excel

$ commcare-export --output-format xlsx --output YOUR_OUTPUT_FILE_NAME.xlsx --project YOUR_PROJECT --query YOUR_QUERY_FILE_NAME.xlsx --username username@email.com --auth-mode apikey --password ***********YOUR_API_KEY**************

Database

$ commcare-export --output-format sql --output DATABASE_URL --project YOUR_PROJECT --query YOUR_QUERY_FILE_NAME.xlsx

Export using Linux

Run the following commands in your command line interpreter to export data from CommCare HQ:

Markdown

$ commcare-export --output-format markdown --project YOUR_PROJECT --query YOUR_QUERY_FILE_NAME.xlsx

Excel

$ commcare-export --output-format xlsx --output YOUR_OUTPUT_FILE_NAME.xlsx --project YOUR_PROJECT --query YOUR_QUERY_FILE_NAME.xlsx --username username@email.com --auth-mode apikey --password ***********YOUR_API_KEY**************

Database

$ commcare-export --output-format sql --output DATABASE_URL --project YOUR_PROJECT --query YOUR_QUERY_FILE_NAME.xlsx

DET Tips and Tricks

Below are some tips and tricks for your DET!

Export repeat groups

Set the "Data Source" to be the path of your repeat group, e.g., form.form.question_group.repeat_group[*]

Reference the questions directly i.e. instead of "form.question_goup.repeat_group.question1" you should use "question1"

Reference root properties of the form with: $

e.g. $.form.@name, $.id

Reference the id of the repeat group with: id

Data Source | Filter Name | Filter Value | Field | Source Field |

|---|---|---|---|---|

form.form.path.to.repeat[*] |

|

| form_id | $.id |

|

|

| form_name | $.form.@name |

|

|

| repeat_question1 | repeat_question1 |

|

|

| repeat_question2 | repeat_question2 |

Export a case or form in JSON representation

Set the "Data Source" to "form" or "case", depending on what is supposed to be extracted.

Set the "Source Field" to "[*]"

Data Source | Filter Name | Filter Value | Field | Source Field |

|---|---|---|---|---|

form |

|

| form_id | id |

|

|

| json_doc | [*] |

In this query file, we are not applying any filters. Considering that the "Data Source" is generic, this would include all forms or cases regardless of their type.

Export one row for each case that was altered by a form

Use "form.form..case" as the data source

Reference case properties directly, e.g., @case_id

Reference root properties of the form with '$' e.g. $.domain, $.form.@name

Data Source | Filter Name | Filter Value | Field | Source Field |

|---|---|---|---|---|

form.form..case |

|

| case_id | @case_id |

|

|

| form_name | $.form.@name |

|

|

| user_id | $.metadata.userID |

|

|

| form_id | $.id |

Determine if a case was created, update, or closed by a form

Use the technique above to export one row for each case in the form

Reference the 'create', 'update', and 'close' fields in the case and map them via the 'bool' mapping

This will produce a 'True' or 'False' value in the output.

Data Source | Filter Name | Filter Value | Field | Source Field | Map Via |

|---|---|---|---|---|---|

form.form..case |

|

| form_id | $.id |

|

|

|

| case_id | @case_id |

|

|

|

| created | create | bool |

|

|

| updated | update | bool |

|

|

| closed | close | bool |

Determine if a form was submitted from a mobile phone or via some other means (API, bulk upload, Cloudcare, etc.)

Reference the 'is_phone_submission' property of the form.

Data Source | Filter Name | Filter Value | Field | Source Field |

|---|---|---|---|---|

form |

|

| form_id | id |

|

|

| form_name | form.@name |

|

|

| is_phone_submission | is_phone_submission |

Convert output values based on a mapping

Available in version 0.12.5 and above. |

You may want to convert an output value to something else by doing a 'lookup' in some instances. For example:

Convert this output | → To this output | ||||||

|---|---|---|---|---|---|---|---|

|

|

To do this, we need to add a 'Mappings' sheet to our configuration file, which contains the lookups for the fields we want to convert.

Here is our configuration sheet:

And here we've added a new sheet called "Mappings", which contains 3 columns:

Mapping Name - this must match the name of a "Field" in the main configuration sheet

Source - this is the value that you want to convert from

Destination - this is the value that you want to convert to

Here is the Mapping sheet from our example:

Exporting the form_link

form_link currently isn't available to be exported as a source field. In order to get the form_link, you can write the following expression in the "Map Via" Column: template(https://www.commcarehq.org/a/{}/reports/form_data/{}/, $.domain, $.id) (see screenshot below).

Data Source | Filter Name | Filter Value | Field | Source Field | Map Via |

|---|---|---|---|---|---|

form |

|

| form_id | id |

|

|

|

| form_link | form_link | template(https://www.commcarehq.org/a/{}/reports/form_data/{}/, $.domain, $.id) |

Export the Parent Case's ID

When exporting cases, if you need the parent's case_id or case_type, use the following source field:

Data Source | Filter Name | Filter Value | Field | Source Field |

|---|---|---|---|---|

case |

|

| id | id |

|

|

| parent_id | indices.parent.case_id |

|

|

| parent_case_type | indices.parent.case_type |

Export the Host Case's ID

When exporting cases, if you need the host's case_id or case_type, use the following source field:

Data Source | Filter Name | Filter Value | Field | Source Field |

|---|---|---|---|---|

case |

|

| id | id |

|

|

| host_id | indices.host.case_id |

|

|

| host_case_type | indices.host.case_type |

DET Advanced Features

Below are some advanced features. Please note that these features only apply to SQL exports.

Show export history

This command can be used to view the history of a particular export:

$ commcare-export-utils history --project X --query A.xlsx --output [SQL URL] | Checkpoint Time | Batch end date | Export Complete | Project | Query Filename | Query MD5 | Key | CommCare HQ | | -------------------------- | ------------------- | --------------- | ------- | ------------------- | -------------------------------- | --- | ----------- | | 2018-08-29T15:18:04.299947 | 2014-08-30T04:30:48 | True | X | A.xlsx | 9043f06f8cb4b26871ebd939609ca01e | | prod | | 2018-08-29T15:18:01.553475 | 2014-08-29T09:45:32 | False | X | A.xlsx | 9043f06f8cb4b26871ebd939609ca01e | | prod | | 2018-08-29T15:17:59.300238 | 2014-08-28T05:13:17 | False | X | A.xlsx | 9043f06f8cb4b26871ebd939609ca01e | | prod | | 2018-08-29T15:17:57.151183 | 2014-08-27T14:36:38 | False | X | A.xlsx | 9043f06f8cb4b26871ebd939609ca01e | | prod | | 2018-08-29T15:17:54.914646 | 2014-08-26T15:41:11 | False | X | A.xlsx | 9043f06f8cb4b26871ebd939609ca01e | | prod | | 2018-08-29T15:17:52.771408 | 2014-08-25T21:46:47 | False | X | A.xlsx | 9043f06f8cb4b26871ebd939609ca01e | | prod |

User defined export key for checkpointing

Normally the export tool uses the MD5 hash of the query file as the checkpoint key i.e. when starting up it will query the database using the MD5 hash to find the last checkpoint time.

This means that if the query file is changed the MD5 will also change the the tool will not be able to find the last checkpoint which will cause it to re-export all the data. In most cases this is what's desired since change to the query file require re-processing the data however in certain scenarios it may be more desirable to continue using the previous checkpoints.

For example, if a new column is added to a form export query file which represents a new field in the form, it is not necessary to re-process all the old data since that field doesn't exist anyway.

To accommodate these use cases the export tool can be made to ignore the MD5 hash of the query file and instead use a key supplied on the command line:

$ commcare-export --project X --query A.xlsx --output [SQL URL] --checkpoint-key my-key ...

Note that when running with a user supplied key the export tool will never consider the MD5 hash and therefore any re-processing of older data will need to be handled manually.

Converting a non-keyed export to a keyed export

To convert an export that has been using the MD5 hash as the key into one that uses a custom key run the following command:

$ commcare-export-utils set-key --project X --query A.xlsx --output [SQL URL] --checkpoint-key my-key

Note that this will use the MD5 hash of the query file to find the checkpoints that need to updated.

Saving data as JSON

This feature is only available on Postgres databases.

You can save data as a "JSON" column. This is particularly useful when attempting to export all form or case data regardless of which fields are present - for example, if you are trying to create a simple data lake for your CommCare data.

To export all form contents as JSON, create a column in a case export file with a "Source Field" of "form" and a data type column set to "json".

To export all case properties as JSON, create a column in a case export file with a "Source Field" of "properties" and a data type column set to "json".

This data can then be queried using Postgres JSON queries. For example:

select form->'@name' as form_name, form->'meta'->'username' as username, form->'meta'->'timeStart' as time_start from form_lake limit 5; form_name | username | time_start -------------+-------------------+------------------------------- "New Event" | "alice" | "2017-06-24T16:24:07.676000Z" "New Event" | "bob" | "2017-07-02T13:43:52.655000Z" "New Event" | "chelsea" | "2017-07-04T07:51:12.528000Z" "New Event" | "daniel" | "2017-07-09T06:10:48.591000Z" "New Event" | "edith" | "2020-04-02T05:58:11.712000Z"

- No labels